When ChatGPT was released in November 2022, it unleashed a plethora of new possibilities, both positive and negative, into the digital era. Instead of spending hours researching where to go on vacation, users could ask ChatGPT for not only a destination but also hotels, flight information and activities almost instantly. In September 2023, ChatGPT’s image generator went public, broadening its scope even further. Before image and video generators, creating a dishonest photo or video might have taken hours. Now it takes seconds. Alongside ChatGPT, other A.I. tools such as Google’s Gemini and Microsoft’s Copilot (formerly Bing Chat) have entered the market, along with numerous others that fall in and out of fashion almost weekly. In the rapidly changing world of technology, it can be hard to stay informed about online safety while still exploring new advancements.

Cyberbullying:

A 2022 Pew Research Center report found that 46% of U.S. teens ages 13 to 17 had experienced at least one form of cyberbullying.

“It’s important to understand cyberbullying is just as impactful as someone coming up to your face and saying it,” Upper School counselor Ellen Kaney-Francis said.

Kaney-Francis said even at smaller schools like Hockaday, cyberbullying can create divisions, both on and off campus.

“We have had many students bring forward examples of cyberbullying, and we are so glad students feel comfortable doing that,” Kaney-Francis said. “A lot of times, it might take adult intervention to shut it down or to have those one-on-one conversations with the perpetrator.”

Cyberbullying thrives on the lack of accountability the internet offers, which lets cyberbullies feel they can act maliciously without facing consequences.

“Cyberbullies can do what they do because of the anonymity of the internet,” Ms. Townsley, the Upper School Technology Integration Specialist, said.

“One of the dangers of AI and social media is that it just continues to put walls between me and you,” Kaney-Francis said. “To stop some of this from building and perpetuating, we need to remind the person perpetrating this that the people they are targeting are real.”

Kaney-Francis also noted that the anonymity of the digital landscape creates a false sense of safety that emboldens people to start hurtful, malicious conversations.

“It’s easy to say things online,” Kaney-Francis said. “It’s easy to misinterpret things. It’s also easy to disconnect ourselves from being fully responsible and owning the messages that we’re putting out there.”

Whether bullying occurs online or in person, bystanders share responsibility. That includes belonging to negative group chats or online forums and participating in harmful comment chains.

“It’s important to ask yourself if you are in spaces where the theme of that space is to talk about another person or to poke fun at somebody to the point where that becomes harmful,” Kaney-Francis said. “It’s about using good judgment and discernment about the spaces that you’re active in.”

Messages posted in online spaces are also far more permanent than spoken words. Something said online can follow a person for years, well beyond high school or college.

“Nothing ever dies online,” Kaney-Francis said.

Rise of A.I.:

As an advocate for responsible A.I. use in schools, Upper School neuroscience teacher Dr. Katie Croft preaches transparency between students and administrators to help the school community better understand artificial intelligence.

“If people are scared to talk about it or don’t know what the school’s position is, then that’s going to lead to more problems than solutions,” Croft said.

She also described ways students and teachers can work together to use A.I. in the classroom.

“A.I. can be a very powerful learning experience,” Croft said, “as long as we continue learning and having conversations about it.”

Her students often participate in A.I.-generated activities that deepen their understanding of course content and open up conversations about A.I.’s lack of originality.

Reflecting on how she incorporated ChatGPT into her lessons, Croft distinguished between using A.I. as a tool and using it with dishonorable intent.

“It’s really not good at making decisions or judgments, but students can,” she said. “We as humans are able to integrate all that information and then decide, but that is what A.I. can’t do, and that’s what really separates us.”

A.I. Usage:

Teachers cannot expect students, especially younger ones, to learn how to use artificial intelligence on their own. As the world changes, curricula must change with it.

“As a teacher, I think it’s really important to start discussing the ‘soft’ skills that we aren’t necessarily taught in school,” Croft said. “Developing empathy for other people and learning how to communicate well will become more important.”

Reminding students of the emotions that make us human can help younger generations distinguish A.I. from real interactions with people and underscore that any software using A.I. is not human.

At the same time, A.I. use keeps growing as chatbots and other A.I. software are marketed as the technology of the future.

“Students are negatively affected by [A.I.] because they are afraid of it,” Townsley said. “They are afraid to use it, because teachers will think they are cheating if they use it.”

Students and teachers are currently wrestling with how to incorporate A.I. in the classroom while maintaining academic integrity. Meanwhile, technology leaders are urging the public to learn how to use A.I.

“If we don’t learn about A.I., we are going to get left behind,” Croft said.

Croft also said A.I.’s rapid growth makes it difficult to stay accurately informed about it.

“It’s really hard to stay up to date because of how fast A.I. is growing and changing,” Croft said.

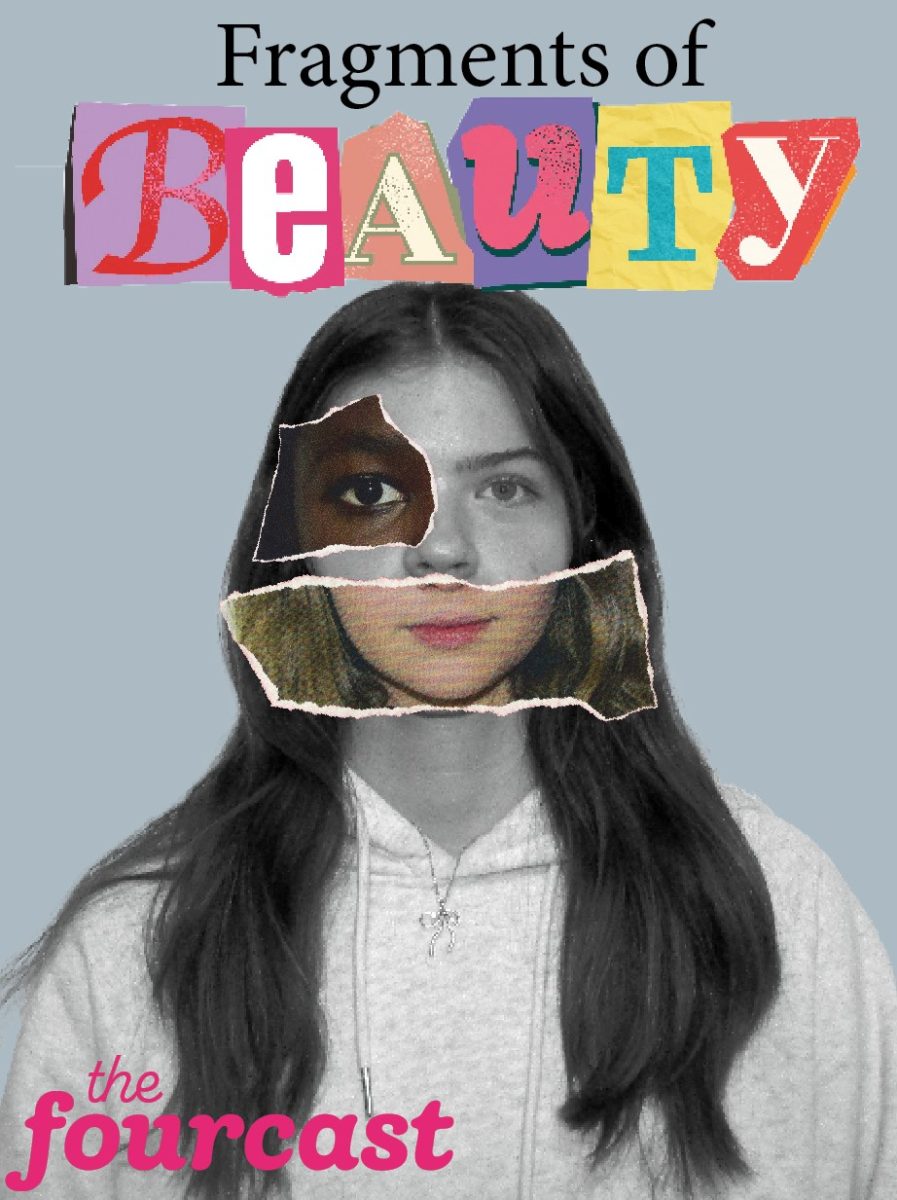

Deepfakes:

Deepfakes and other fake images created to hurt others are, unfortunately, nothing new. However, with the number of editing apps and A.I. websites available to the public, creating a fake image has become easier than ever.

“It’s exponentially easier to create that content now,” Upper School neuroscience teacher Katie Croft said. “If we get to the point where there’s so much false content and we’re able to see that it’s false, I do think that could affect public trust.”

As fabricated images and videos become commonplace in digital forums, Croft believes we must actively discuss and evaluate the risks they pose to our community.

“Hiding our heads under the sand is not the answer,” Croft said. “You can’t say ‘I know that’s happening over there, but we just have to keep doing our best.’”

A lack of knowledge about A.I. leads many people to believe that fake photos, audio and other media are real, even when they show signs of being artificially generated.

“If people don’t have the knowledge and they don’t understand, then they will be the ones that will be duped,” Townsley said.

While some people cannot tell true content from false, others choose to dismiss the connection between A.I.’s rapidly expanding capabilities and the harm they can enable.

“People are ignorant about things, about A.I., about the use of deepfakes and voicefakes and all that,” Townsley said. “It is ignorance that causes fear. That is the root cause of cyberbullying. That is why knowledge is power, because then you can make an informed decision.”

To maintain a safe environment, school regulations on media and the way adults talk about A.I. need to foster a sense of security, so students feel comfortable reaching out to adults when they face challenges in the digital world.

“If we are going to protect our students and teach responsible use, I think we have a massive obligation to help you with online conflict and security as well,” Croft said.

As deepfakes become more common, Croft and Townsley agree that teachers and administrators need to provide a safe environment where students feel able to talk about their experiences with fake images and other content created online. Deepfakes should not become a taboo or shameful topic, especially in an educational setting.

Social Media:

Social media platforms like Instagram, TikTok and Snapchat draw large portions of their audiences from teenagers and young adults. Social media has become a central part of how people communicate, particularly high school and college-aged students.

Upper School counselor Ellen Kaney-Francis said social media can be a force for good as well as a source of stress. She said social media reinforces content through repetition, which can be harmful for those dealing with negative thought patterns.

“If your mood has shifted because you are engaged in a certain part of social media, you need to ask yourself what thoughts that content is triggering and why that is happening,” Kaney-Francis said.

While some students may have negative experiences with social media, others turn to it for stress relief or relaxation. In a 2016 study on social media run by the Association for Information Systems, 72 responses noted that people turned to social networks for leisure.

“We have to be careful about not making blanket statements like ‘everyone is looking at things that make them feel bad about themselves,’” Kaney-Francis said.